Weekly AI Recap: Meta shares suffer, even as the company continues to ship new AI features

Plus, Microsoft and Apple scaled down the size of their AI systems (but not their AI ambitions) with new small language models.

Multimodal AI is now available on the Ray-Ban Meta Smart Glasses. / Adobe Stock

Meta shares drop following plans to continue to ‘invest aggressively’ in AI

Meta reported its earnings for the first quarter of 2024 on Wednesday, exceeding analyst expectations with $36.5bn in revenue.

Investors were clearly alarmed, however, by Meta’s plans to push more money into AI R&D – an area in which Meta has been expanding rapidly but which is not yet profitable for the company. According to CNBC, the tech giant’s stock fell by 10% on Thursday, its worst day since October 2022.

Meta chief financial officer Susan Li said during a call with investors on Wednesday that the company’s capital expenditures for 2024 will range between $35bn and $40bn as it continues to ramp up its investments in AI infrastructure.

Li added: “We expect CapEx will continue to increase next year as we invest aggressively to support our ambitious AI research and product development efforts.”

Last week, Meta unveiled 8bn- and 70bn-parameter versions of Llama 3, the latest iteration of its open-source large language model. CEO Mark Zuckerberg told investors on the Wednesday call that the company is currently training a 400bn-plus-parameter version of Llama 3 that “seems on track to be industry-leading on several benchmarks.”

The Ray-Ban Meta Smart Glasses go multimodal

Speaking of Meta’s investments in AI, the company announced on Tuesday that multimodal AI had arrived on its Smart Glasses for all users.

The glasses, designed in collaboration with Ray-Ban, were released last fall. An experimental multimodal AI feature was rolled out to a cohort of early testers earlier this year.

Multimodal AI models are capable of processing multiple forms of content, such as video, images, and text. Thanks to the technology, someone wearing the Meta Smart Glasses can now, for example, take a picture of a dog and ask the glasses to identify the breed; a robotic voice will then respond through the glasses’ built-in speakers, which turn them into open-ear headphones. Wearers can also take a photo and have the glasses generate a social media caption for it. Users need only prompt the glasses by saying: “Hey Meta, look and...”

Meta’s announcement of the universal deployment of multimodal AI for its Smart Glasses arrives shortly after Humane’s AI Pin was scorched by early testers who reported a slew of technical problems.

Such reports did not bode well for the future of AI wearable technology – which some brands are positioning as the eventual successor to the smartphone – but Meta’s Smart Glasses hint at a different, and potentially more commercially successful, path for the technology.

New ‘small language models’ hit the market

Big tech is starting to take a smaller approach to generative AI.

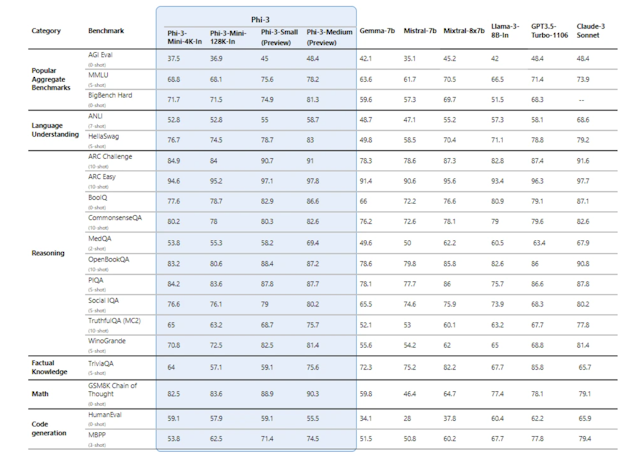

On Tuesday, Microsoft introduced its Phi-3 family of small language models – which, according to a company blog post, are “designed to perform well for simpler tasks, are more accessible and easier to use for organizations with limited resources and ... can be more easily fine-tuned to meet specific needs.”

Phi-3 comes in three sizes: Mini, Small and Medium. Mini – which has just 3.8bn parameters and is, as you’ve probably deduced, the smallest of the three – is the only one that has been publicly released so far.

Microsoft reported that, despite their comparatively small sizes, the three Phi-3 models either closely rivaled or outperformed leading LLMs such as GPT-3.5 and Claude 3 Sonnet on a number of key industry benchmarks.

Their smaller size means that the Phi-3 models don’t always respond as accurately or as fluently as leading LLMs, but it also makes them less expensive to operate. Microsoft is betting that clients looking to offer AI tools to their customers will be willing to accept the trade-off.

On Wednesday, Apple followed Microsoft’s announcement by releasing eight small language models of its own.

The models, collectively called OpenELM, are reportedly compact enough to run on a smartphone, signaling possible future efforts from Apple to bring AI offerings directly to users’ individual devices. The OpenELM models are primarily in proof-of-concept form at the moment, according to Ars Technica.

Perplexity launches Enterprise Pro

On Tuesday, Perplexity announced the release of Enterprise Pro, the company’s first B2B offering.

Little more than a year old, the San Francisco-based startup has been making waves throughout Silicon Valley after receiving financial support from the likes of Jeff Bezos. It has been billed in the press as a potential challenger to Google, which has long been hailed as the more or less unrivaled ruler of the online search industry.

Leveraging generative AI, Perplexity’s search engine responds to user queries with detailed but concise text answers – including links to relevant sources – rather than an effectively bottomless list of internet links. The search engine also encourages users to dig deeper by prompting them with relevant follow-up questions.

Perplexity is positioning Enterprise Pro as a more business- and IP-friendly alternative to offerings from other leading AI companies, such as OpenAI, which have been accused of scraping copyrighted data in order to train their proprietary models.

“Enterprises feel comfortable that we’re not training our models based off of their prompts – it’s all kosher on that front,” Perplexity chief business officer Dmitry Shevelenko told The Drum in an interview. “And so that will turbocharge usage even further.”

Enterprise Pro is now available for $40 per seat per month or $400 per seat per year.

Generative AI now being used for gene editing

Researchers are now using generative AI in an effort to edit DNA and develop more individualized treatments for certain illnesses and diseases, according to a report published on Monday by The New York Times.

Described in a research paper published on Monday by Profluent, a startup based in Berkeley, California, the technology builds on Crispr (short for Clustered Regularly Interspaced Short Palindromic Repeats), a gene-editing method for which biochemists Emmanuelle Charpentier and Jennifer Doudna shared the 2020 Nobel Prize in Chemistry.

OpenCrispr-1, as the system is called, also harnesses neural networks to analyze huge quantities of genomic data and suggest new mechanisms for editing DNA.

For more on the latest happenings in AI, web3 and other cutting-edge technologies, sign up for The Emerging Tech Briefing newsletter.